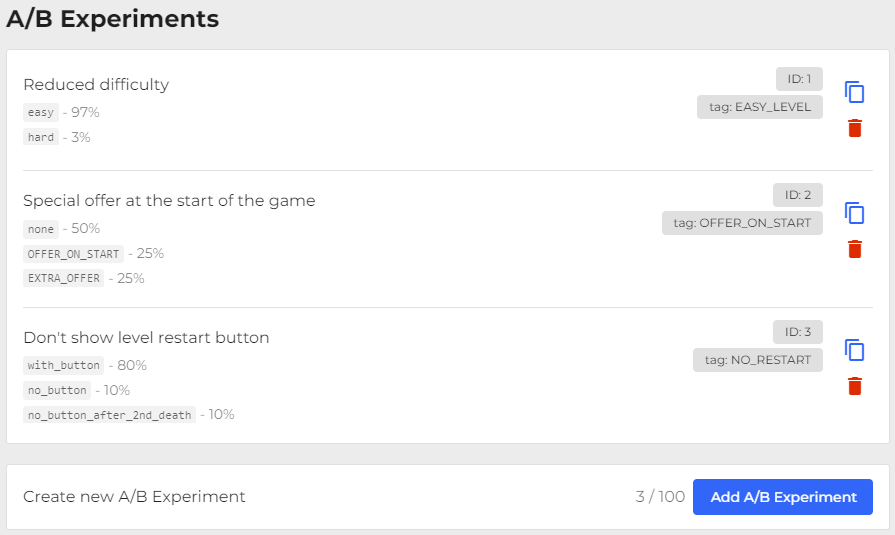

A/B Experiments

Concept

- The A/B experiments module will help you test different hypotheses to improve player experience and monetization of your project.

- Each experiment is divided into cohorts of participating players. A cohort is one of the groups of participants in the experiment that a player randomly falls into.

- You can specify the percentage of players that will be included in each cohort.

- The server assigns a cohort to the player automatically on their first entry into the game. If you reshuffle the experiment, the player's cohort will change on their next visit. To avoid inconsistencies, experiments do not change during gameplay; changes take effect the next time the player logs in.

- The cohort is assigned to the player's account. When using different devices, the cohort will be the same.

- Through the SDK, you have access to information about all the experiments in which the player participates and the cohorts they are in.

- You do not need a separate request to obtain information about experiments; it is available once the player has been auto-loaded at startup.

- The number of A/B experiments is limited: 10 for the basic usage plan and 100 for the premium usage plan.

- All experiments are automatically passed on to your analytics systems (Yandex.Metrica, Google Analytics) as visit parameters and event/reach goal parameters.

📄️ A/B Experiments API

Integrating experiments through the SDK. Methods of operation.

Add Experiments via Control Panel

In the A/B Experiments section of your project, you can create experiments.

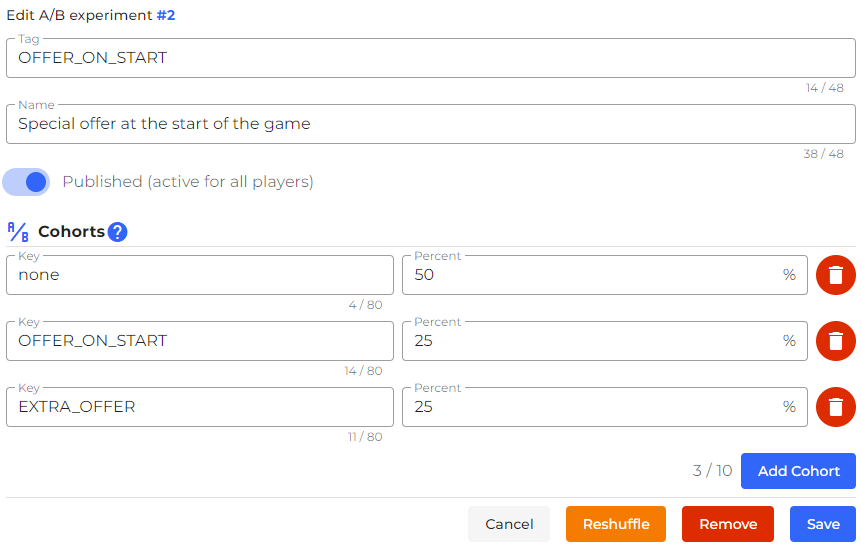

In the add form, you can:

- Specify a tag for SDK access.

- Specify a name for yourself to make it easier to navigate through the experiments.

- Specify visibility: for all players or only in testing mode.

- Specify the cohorts for the experiment.

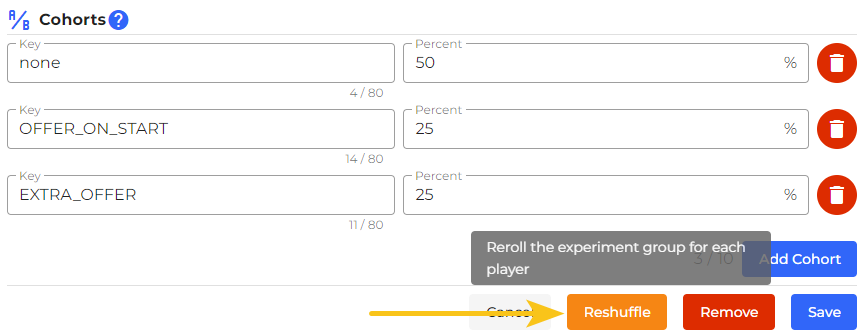

Reset Distribution Ratios / Reshuffle

You can reset the distribution of players among cohorts (reshuffle them). After that, each player is assigned a new version of the experiment the next time they enter the game.

This allows you to:

- Test the hypothesis again with a different random distribution of players among cohorts. This way, you can check whether the experiment results were caused by an imbalance of players with certain qualities in one of the cohorts. For example, in an experiment that hides the "level restart" button, one cohort may have happened to include more skilled players, who lose less often and rarely see this button at all.

- Temporarily switch players to the main version / roll back the experiment. If for some reason you cannot remove the experiment from publication, you can set the value of the main version to 100% and the remaining versions to 0% and click the reshuffle button to switch all players to the main version of the experiment.

- Finish the experiment without waiting for the release of a version with the experiment code removed. You can delete the code later and switch everyone to the desired version now. You can set the value of the winning version to 100% and the remaining versions to 0% and click the reshuffle button.

The reshuffle button is located inside the player editing form.

How the experiment is conducted

The cohort percentages may add up to less than 100%. In that case, the cohorts are distributed over a range of 100%, and a player who does not fall into any of them is assigned an empty cohort.

Example:

- C1 - 25%

- C2 - 25%

- The system adds the CEMPTY cohort - 50%

In this case, when a player belongs to an empty cohort, the value of the cohort in the SDK will be an empty string:

```javascript
ss.experiments.has('OFFER_ON_START', '') // true
```
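In game code, the empty cohort can be treated as "the player is not participating". Below is a minimal sketch that assumes only the `ss.experiments.has(tag, cohort)` call shown above. The `pickStartOffer` helper and the `makeFakeSdk` stub are hypothetical and exist only so the snippet can run outside a game; `sale_50` is just a sample cohort name.

```javascript
// Hypothetical helper: decides which start offer to show.
// Relies only on ss.experiments.has(tag, cohort).
function pickStartOffer(ss) {
  // Empty string: the player fell outside every configured cohort.
  if (ss.experiments.has('OFFER_ON_START', '')) {
    return null;
  }
  if (ss.experiments.has('OFFER_ON_START', 'sale_50')) {
    return 'sale_50';
  }
  // Unknown cohort: fall back to the default behavior.
  return null;
}

// Stub standing in for the real SDK object (assumption, for local runs only).
// A tag missing from cohortByTag is treated as the empty cohort.
function makeFakeSdk(cohortByTag) {
  return {
    experiments: {
      has: (tag, cohort) => (cohortByTag[tag] ?? '') === cohort,
    },
  };
}

console.log(pickStartOffer(makeFakeSdk({ OFFER_ON_START: 'sale_50' }))); // prints: sale_50
console.log(pickStartOffer(makeFakeSdk({}))); // prints: null
```

Checking the empty cohort first keeps the default experience as the fallback for anyone outside the experiment.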

The chance of belonging to a cohort is that cohort's share of the total sum of cohort percentages.

For example, if you specified 50% for each cohort:

- C1 - 50%

- C2 - 50%

- C3 - 50%

- Total 150%

This means that they all have an equal probability of 1/3.
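The same arithmetic can be sketched in a few lines. This is only an illustration of the rule described above, assuming the percentages add up to at least 100%; `cohortProbabilities` is a hypothetical helper and not part of the SDK.

```javascript
// Hypothetical helper: each cohort's chance is its share of the total.
// percents: plain object mapping cohort name -> configured percentage.
function cohortProbabilities(percents) {
  const total = Object.values(percents).reduce((sum, p) => sum + p, 0);
  const result = {};
  for (const [name, p] of Object.entries(percents)) {
    result[name] = p / total;
  }
  return result;
}

// Three cohorts at 50% each: every cohort gets 50 / 150 = 1/3.
console.log(cohortProbabilities({ C1: 50, C2: 50, C3: 50 }));
```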

Calculation example:

The system generates a random number in the range of 0 to 150.

It generates 114.

We determine which cohort's range the number falls into:

- C1 - [0-50)

- C2 - [50-100)

- C3 - [100-150)

- Answer: C3
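The range lookup above can be sketched as follows. This is an illustration under stated assumptions, not the server's actual code: `pickCohort` and `rollFor` are hypothetical names, and the upper bound of the roll is assumed to be the larger of the total and 100, so that a total below 100% leaves room for the empty cohort.

```javascript
// Hypothetical sketch of the range lookup; not the server's actual code.
// cohorts: ordered array of { name, percent }.
// roll: number in [0, rangeEnd), where rangeEnd = max(total, 100).
function pickCohort(cohorts, roll) {
  let upper = 0;
  for (const cohort of cohorts) {
    upper += cohort.percent; // this cohort owns [upper - percent, upper)
    if (roll < upper) {
      return cohort.name;
    }
  }
  // The roll landed past every configured range: the empty cohort.
  return '';
}

// Produces a roll for the given cohorts; `random` is injectable for testing.
function rollFor(cohorts, random = Math.random) {
  const total = cohorts.reduce((sum, c) => sum + c.percent, 0);
  return random() * Math.max(total, 100);
}

const cohorts = [
  { name: 'C1', percent: 50 },
  { name: 'C2', percent: 50 },
  { name: 'C3', percent: 50 },
];
console.log(pickCohort(cohorts, 114)); // prints: C3
```

A cohort set to 0% owns an empty range and is never selected, which matches the rollback scenario where one version is set to 100% and the rest to 0%.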

Track metrics through analytics systems

At startup, the SDK sends visit parameters indicating the player's experiments to the analytics systems. The same parameters are attached to every goal/event sent to analytics.

For more information on setting up analytics systems, refer to the Analytics section.

With each action, parameters are sent in the format "SS_AB_yourtag": "cohort".

For example: "SS_AB_OFFER_ON_START": "sale_50".
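The SDK sends these parameters on its own, so no extra code is required. If you need to reproduce the same keys elsewhere, for instance when filtering exported data, the naming rule can be sketched like this. `toAnalyticsParams` is a hypothetical helper, and the input shape (a plain object mapping tag to cohort) is an assumption made for illustration.

```javascript
// Hypothetical helper: builds keys in the "SS_AB_yourtag": "cohort" format.
// experiments: plain object mapping experiment tag -> cohort name.
function toAnalyticsParams(experiments) {
  const params = {};
  for (const [tag, cohort] of Object.entries(experiments)) {
    params[`SS_AB_${tag}`] = cohort;
  }
  return params;
}

console.log(toAnalyticsParams({ OFFER_ON_START: 'sale_50' }));
// prints: { SS_AB_OFFER_ON_START: 'sale_50' }
```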

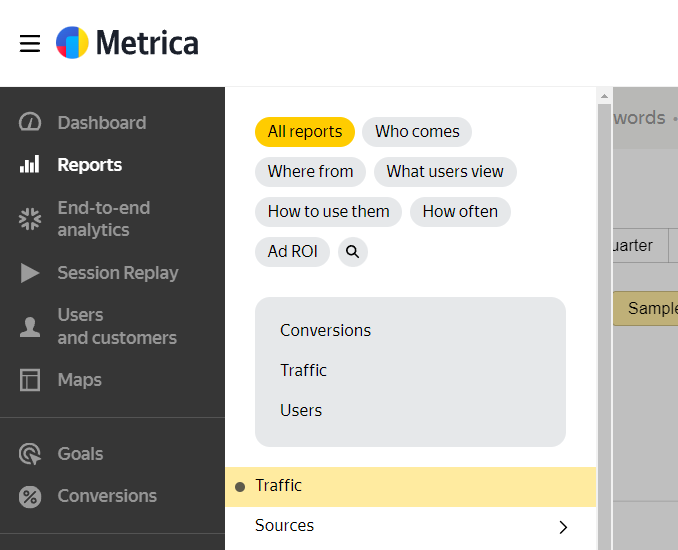

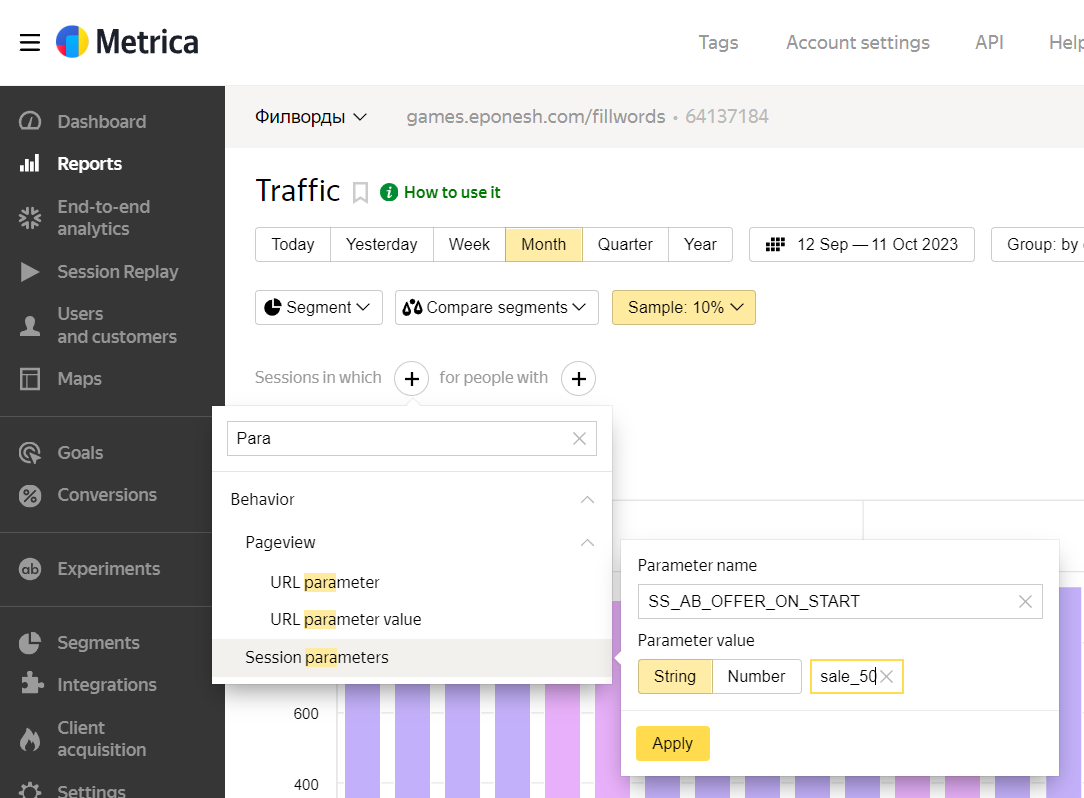

Yandex.Metrica

Go to Yandex.Metrica, select the counter for your game, and go to the Traffic section.

Select visit parameters for version A in the format:

- Parameter name: SS_AB_yourtag

- Parameter value (string): cohort

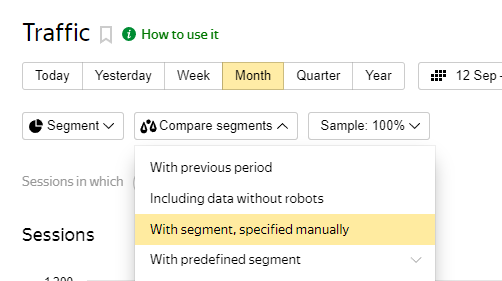

Then select Compare segments > With segment, specified manually.

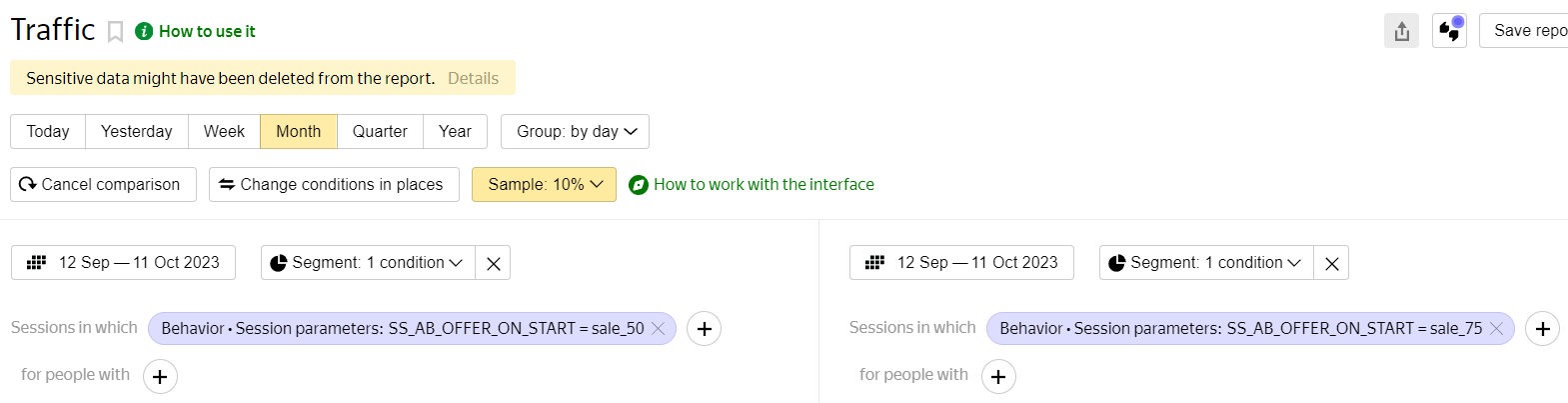

For the second variant, also specify the visit parameters. The result should look like this:

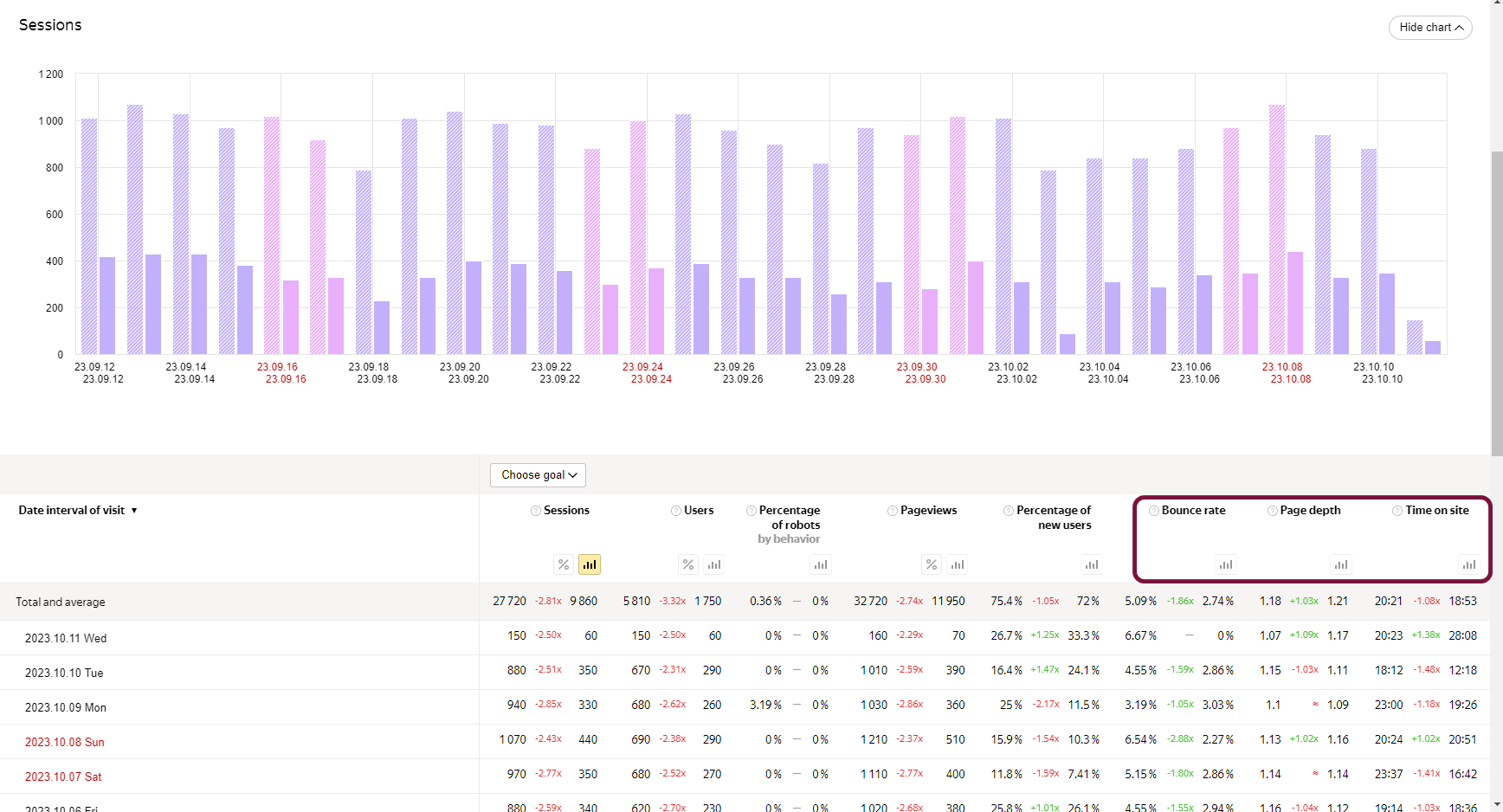

Now you can see how the experiment affected bounce rate and session duration.

You can also check how goal conversion rates have changed in the Conversions section by selecting the desired group and specifying visit parameters.

Google Analytics

Watch the video and read the guide on how to set up tracking of statistics from custom event parameters.

https://support.google.com/analytics/answer/10075209

Getting Started

- Go to your project in the SpellSync Dashboard;

- Go to the A/B Experiments section;

- Add the first experiment;

- Familiarize yourself with working with experiments in the SDK and test the experiment cohorts.

Go to SDK documentation👇

📄️ A/B Experiments API

Integrating experiments through the SDK. Methods of operation.

Stay in Touch

Other documents in this chapter are available here. To get started, see the Tutorials chapter.

SpellSync Community Telegram: @spellsync.

For your suggestions e-mail: [email protected]

We wish you success!